Investigating why a database doesn’t start after successfully dropping a diskgroup

Posted by Kamran Agayev A. on December 24th, 2020

A few months ago, while performing a storage migration, I faced an interesting issue that could have led to downtime if I hadn’t noticed a hidden warning in the log file.

The plan was to create a new ASM diskgroup with normal redundancy using 2 disks from different storage arrays, simulate a disk crash, and confirm that there would be no data loss if one of the storage arrays failed. After creating the diskgroup, creating a test tablespace on it and corrupting the header of one of the disks, everything was OK, so we decided to drop the diskgroup and start adding the new disks as failgroups to the other diskgroups.

Below, I reproduce the same problem in my test environment.

- First, I get the location of the control files and datafiles (the redo log files should be checked as well) to see which diskgroups contain physical files:

SQL> show parameter control

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
control_files                        string      +CFILE2/TESTDB/CONTROLFILE/current.256.1046097231

SQL> select name from v$datafile;

NAME

--------------------------------------------------------------------------------

+DATA/TESTDB/DATAFILE/system.278.1046088963

+DATA/TESTDB/DATAFILE/sysaux.277.1046088795

+DATA/TESTDB/DATAFILE/undotbs1.280.1046089213

+DATA/TESTDB/DATAFILE/undotbs2.288.1046089391

+DATA/TESTDB/DATAFILE/users.279.1046089209

SQL>

As you can see, two diskgroups are involved: +CFILE2 and +DATA. Next, I run the srvctl config database command and grep for the list of diskgroups used by this database. The output matches: +CFILE2 and +DATA.

-bash-4.1$ srvctl config database -d testdb | grep Disk

Disk Groups: DATA,CFILE2

-bash-4.1$
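The comparison just made by eye can be sketched in Python: take the diskgroup name from each ASM file path (the part between `+` and the first `/`) and compare the result with the `Disk Groups:` line printed by `srvctl config database`. This is a minimal sketch that assumes both outputs have already been captured as text; the function names are hypothetical helpers, not part of any Oracle tool.

```python
import re

def diskgroups_from_paths(paths):
    """Diskgroup names taken from ASM paths such as '+DATA/TESTDB/...'."""
    groups = set()
    for path in paths:
        match = re.match(r"\+([^/]+)/", path.strip())
        if match:
            groups.add(match.group(1).upper())
    return groups

def diskgroups_from_srvctl(config_text):
    """Diskgroups on the 'Disk Groups:' line of `srvctl config database` output."""
    for line in config_text.splitlines():
        if line.strip().startswith("Disk Groups:"):
            value = line.split(":", 1)[1]
            return {dg.strip().upper() for dg in value.split(",") if dg.strip()}
    return set()

def compare(paths, config_text):
    """Return (configured but holding no files, holding files but not configured)."""
    used = diskgroups_from_paths(paths)
    configured = diskgroups_from_srvctl(config_text)
    return configured - used, used - configured

# Paths copied from the query output above (redo logs omitted for brevity).
files = [
    "+CFILE2/TESTDB/CONTROLFILE/current.256.1046097231",
    "+DATA/TESTDB/DATAFILE/system.278.1046088963",
    "+DATA/TESTDB/DATAFILE/users.279.1046089209",
]
print(compare(files, "Disk Groups: DATA,CFILE2"))  # (set(), set())
```

A non-empty first set is exactly the situation this post is about: a diskgroup that the database resource still depends on although none of the database files live in it.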

- Next, I query the V$ASM_DISKGROUP view to get the list of all diskgroups available in ASM:

SQL> col name format a40

SQL> set linesize 150

SQL> select group_number, name, state, type, total_mb, free_mb from v$asm_diskgroup;

GROUP_NUMBER NAME                                     STATE       TYPE     TOTAL_MB    FREE_MB
------------ ---------------------------------------- ----------- ------ ---------- ----------
           4 TESTDG                                   MOUNTED     EXTERN       1019        923
           3 DATA                                     CONNECTED   EXTERN      15342       8239
           1 CFILE2                                   CONNECTED   EXTERN       1019        892

SQL>

- We have three diskgroups: +CFILE2, +DATA and +TESTDG. Next, I create a new tablespace in diskgroup +TESTDG so that it becomes part of the database configuration:

SQL> create tablespace mytbs datafile '+TESTDG' size 10m;

Tablespace created.

SQL>

- Once a tablespace is created in the new diskgroup, the diskgroup becomes part of the database configuration and a dependency is established between the database and the diskgroup, as the database alert.log shows:

- Output of the database alert.log file:

Wed Jul 29 09:02:47 2020

create tablespace mytbs datafile '+TESTDG' size 10m

Wed Jul 29 09:02:48 2020

NOTE: ASMB mounting group 4 (TESTDG)

NOTE: Assigning number (4,0) to disk (/dev/asm-disk5)

SUCCESS: mounted group 4 (TESTDG)

NOTE: grp 4 disk 0: TESTDG_0000 path:/dev/asm-disk5

Wed Jul 29 09:02:50 2020

NOTE: dependency between database testdb and diskgroup resource ora.TESTDG.dg is established

Completed: create tablespace mytbs datafile '+TESTDG' size 10m

- Output of the ASM alert.log file:

Wed Jul 29 09:02:48 2020

NOTE: client testdb1:testdb:rac-scan mounted group 4 (TESTDG)

Wed Jul 29 09:02:49 2020

NOTE: Advanced to new COD format for group TESTDG

- The crsd.trc file shows that a hard dependency has been created between the diskgroup and the database:

2020-07-29 09:02:50.015412 :UiServer:204928768: {1:32997:407} Container [ Name: UI_REGISTER

API_HDR_VER:

TextMessage[3]

API_REGUPDATE_TAG:

TextMessage[1]

ASYNC_TAG:

TextMessage[1]

ATTR_LIST:

TextMessage[MANAGEMENT_POLICY=AUTOMATICSTART_DEPENDENCIES=+hard(ora.TESTDG.dg)+pullup(ora.TESTDG.dg)STOP_DEPENDENCIES=+hard(shutdown:ora.TESTDG.dg)]

CLIENT:

TextMessage[]

CLIENT_NAME:

TextMessage[Unknown process]

CLIENT_PID:

TextMessage[8543]

CLIENT_PRIMARY_GROUP:

TextMessage[oinstall]

LOCALE:

TextMessage[AMERICAN_AMERICA.AL32UTF8]

NO_WAIT_TAG:

TextMessage[1]

QUEUE_TAG:

TextMessage[1]

RESOURCE:

TextMessage[ora.testdb.db]

UPDATE_TAG:

TextMessage[1]

]
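The ATTR_LIST line is dense, so a small parser helps make the hard start dependencies explicit. This is a sketch that assumes the text inside `TextMessage[...]` has been copied from the trace file as a single string; it only looks at `hard(...)` entries in the START_DEPENDENCIES part and treats any target of the form `ora.<NAME>.dg` as a diskgroup resource. The function name is hypothetical.

```python
import re

def hard_start_diskgroups(attr_list):
    """Diskgroups named inside hard(...) in the START_DEPENDENCIES part of ATTR_LIST."""
    marker = "START_DEPENDENCIES="
    pos = attr_list.find(marker)
    if pos == -1:
        return []
    # Keep only the start dependencies; stop dependencies follow them.
    section = attr_list[pos + len(marker):].split("STOP_DEPENDENCIES=")[0]
    found = []
    for inner in re.findall(r"hard\(([^)]*)\)", section):
        for target in inner.split(","):
            resource = target.split(":")[-1].strip()  # drop modifiers like 'shutdown:'
            m = re.fullmatch(r"ora\.(.+)\.dg", resource)
            if m and m.group(1) not in found:
                found.append(m.group(1))
    return found

before = ("MANAGEMENT_POLICY=AUTOMATICSTART_DEPENDENCIES=+hard(ora.TESTDG.dg)"
          "+pullup(ora.TESTDG.dg)STOP_DEPENDENCIES=+hard(shutdown:ora.TESTDG.dg)")
print(hard_start_diskgroups(before))  # ['TESTDG']
```

The same function can be pointed at any later ATTR_LIST for the database resource to see which diskgroups it currently requires at startup.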

- To see the new list of diskgroups that are part of the database configuration, we run the following command:

-bash-4.1$ srvctl config database -d testdb | grep Disk

Disk Groups: DATA,CFILE2,TESTDG

-bash-4.1$

As you can see, diskgroup +TESTDG is now also part of the database configuration. Next, to simulate a storage failure or disk crash, I corrupt the disk of diskgroup +TESTDG using the dd command:

-bash-4.1$ dd if=/dev/zero of=/dev/asm-disk5 bs=1024 count=10000

10000+0 records in

10000+0 records out

10240000 bytes (10 MB) copied, 0.125557 s, 81.6 MB/s

-bash-4.1$

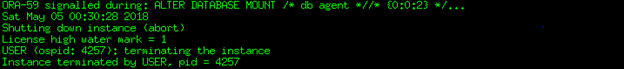
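The figures in the dd output can be checked by hand: dd writes `count` blocks of `bs` bytes starting at offset 0 of the device, so the first ~10 MB of the disk, including the ASM disk header at the very beginning, is overwritten with zeros. The "MB" values dd prints are decimal megabytes.

$$
\begin{aligned}
\text{bytes} &= \text{bs} \times \text{count} = 1024 \times 10000 = 10\,240\,000\ \text{B} \approx 10.2\ \text{MB} \approx 9.77\ \text{MiB} \\
\text{rate} &= \frac{10\,240\,000\ \text{B}}{0.125557\ \text{s}} \approx 81.56 \times 10^{6}\ \text{B/s} \approx 81.6\ \text{MB/s}
\end{aligned}
$$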

- Then I check the alert.log file. Once the database detects that the disk of the external-redundancy diskgroup is corrupted, the instance crashes:

Wed Jul 29 09:19:45 2020

USER (ospid: 27939): terminating the instance

Wed Jul 29 09:19:47 2020

Instance terminated by USER, pid = 27939

- The alert.log file of the ASM instance shows that ASM tried to offline the disk but could not, because the diskgroup has external redundancy:

Wed Jul 29 09:19:49 2020

NOTE: SMON did instance recovery for group DATA domain 3

NOTE: SMON detected lock domain 4 invalid at system inc 6 07/29/20 09:19:49

NOTE: SMON starting instance recovery of group TESTDG domain 4 inc 6 (mounted) at 07/29/20 09:19:49

NOTE: SMON will attempt offline of disk 0 - no header

NOTE: cache initiating offline of disk 0 group TESTDG

NOTE: process _smon_+asm1 (5245) initiating offline of disk 0.3916011317 (TESTDG_0000) with mask 0x7e in group 4 (TESTDG) with client assisting

NOTE: initiating PST update: grp 4 (TESTDG), dsk = 0/0xe9699735, mask = 0x6a, op = clear

Wed Jul 29 09:19:49 2020

GMON updating disk modes for group 4 at 14 for pid 18, osid 5245

ERROR: disk 0(TESTDG_0000) in group 4(TESTDG) cannot be offlined because the disk group has external redundancy.

Wed Jul 29 09:19:49 2020

ERROR: too many offline disks in PST (grp 4)
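These errors follow from how redundancy works: with EXTERNAL redundancy ASM keeps a single copy of every extent, so there is no mirror to fall back on and the disk cannot simply be taken offline. The simplified model below uses the TYPE values shown by V$ASM_DISKGROUP. It is only a sketch: it ignores FLEX/EXTENDED redundancy, quorum failure groups and rebalance, and is meant to show why the normal-redundancy diskgroup from the original plan can lose one storage array while TESTDG cannot.

```python
# Copies of each extent that ASM keeps for the classic redundancy types
# (TYPE column of V$ASM_DISKGROUP). Simplified: FLEX/EXTENDED are ignored.
COPIES = {"EXTERN": 1, "NORMAL": 2, "HIGH": 3}

def tolerates_failgroup_loss(redundancy, failed_failgroups):
    """True if ASM mirroring guarantees that every extent still has a copy
    after `failed_failgroups` failure groups are lost (each copy of an
    extent lives in a different failure group)."""
    return failed_failgroups < COPIES[redundancy.upper()]

print(tolerates_failgroup_loss("EXTERN", 1))  # False: TESTDG in this test
print(tolerates_failgroup_loss("NORMAL", 1))  # True: the planned two-storage diskgroup
```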

- Now we try to start the database:

-bash-4.1$ srvctl start database -d testdb

PRCR-1079 : Failed to start resource ora.testdb.db

CRS-5017: The resource action "ora.testdb.db start" encountered the following error:

ORA-01157: cannot identify/lock data file 2 - see DBWR trace file

ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'

. For details refer to "(:CLSN00107:)" in "/u01/app/oracle/diag/crs/node1/crs/trace/crsd_oraagent_oracle.trc".

It fails because it can’t access the datafile that resides in the failed diskgroup. Here’s the output of the trace file:

CRS-2674: Start of 'ora.testdb.db' on 'node1' failed

CRS-2632: There are no more servers to try to place resource 'ora.testdb.db' on that would satisfy its placement policy

CRS-5017: The resource action "ora.testdb.db start" encountered the following error:

ORA-01157: cannot identify/lock data file 2 - see DBWR trace file

ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'

. For details refer to "(:CLSN00107:)" in "/u01/app/oracle/diag/crs/node2/crs/trace/crsd_oraagent_oracle.trc".

CRS-2674: Start of 'ora.testdb.db' on 'node2' failed

- Output of alert.log file:

Wed Jul 29 09:22:15 2020

Errors in file /u01/app/oracle/diag/rdbms/testdb/testdb1/trace/testdb1_ora_28674.trc:

ORA-01157: cannot identify/lock data file 2 - see DBWR trace file

ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'

ORA-1157 signalled during: ALTER DATABASE OPEN /* db agent *//* {1:32997:676} */...

Wed Jul 29 09:22:17 2020

License high water mark = 1

Wed Jul 29 09:22:17 2020

USER (ospid: 28854): terminating the instance

Wed Jul 29 09:22:18 2020

Instance terminated by USER, pid = 28854

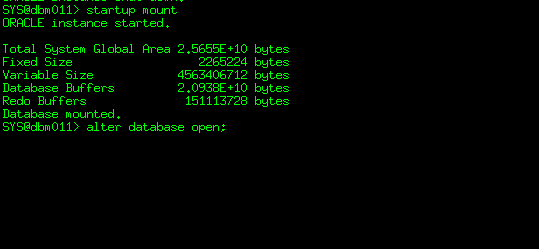
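Before offlining anything, it helps to list exactly which files the startup errors point at. The sketch below pulls the file number and diskgroup out of ORA-01110 lines (in the format shown above) and builds the statement used in the next step. The helper name is hypothetical, and the generated statement, like the one in this post, assumes the database runs in ARCHIVELOG mode (in NOARCHIVELOG mode Oracle requires `OFFLINE FOR DROP` instead).

```python
import re

# Matches: ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'
ORA_01110 = re.compile(r"ORA-01110: data file (\d+): '(\+([^/']+)/[^']*)'")

def files_to_offline(error_text):
    """(file#, diskgroup, statement) for each distinct file named in ORA-01110."""
    result = []
    for file_no, _path, diskgroup in ORA_01110.findall(error_text):
        entry = (int(file_no), diskgroup, f"alter database datafile {file_no} offline;")
        if entry not in result:
            result.append(entry)
    return result

log = """ORA-01157: cannot identify/lock data file 2 - see DBWR trace file
ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'
ORA-01157: cannot identify/lock data file 2 - see DBWR trace file
ORA-01110: data file 2: '+TESTDG/TESTDB/DATAFILE/mytbs.256.1047027769'"""

for file_no, diskgroup, stmt in files_to_offline(log):
    print(f"file {file_no} in +{diskgroup}: {stmt}")
# file 2 in +TESTDG: alter database datafile 2 offline;
```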

- Next, we offline the datafile and restart the database:

SQL> alter database datafile 2 offline;

Database altered.

SQL>

-bash-4.1$ srvctl stop database -d testdb -stopoption abort

-bash-4.1$ srvctl start database -d testdb

The database is UP! Great! But... we only solved the physical file dependency that was preventing the database from starting. The failed diskgroup is still in the configuration of the database resource:

-bash-4.1$ srvctl config database -d testdb | grep Disk

Disk Groups: DATA,CFILE2,TESTDG

-bash-4.1$

This means that once we restart the clusterware stack, the database resource will NOT start, because it has a hard dependency on a diskgroup in its configuration, and that diskgroup has FAILED.
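The reason is the semantics of a hard start dependency: the database resource may only start when every diskgroup it depends on is running, and CRS tries to start those diskgroups first. The toy model below is plain Python with hypothetical names and simplified messages (not real CRS-xxxx output); it shows why one unmountable diskgroup blocks the whole resource.

```python
def start_with_hard_deps(resource, hard_deps, online, startable):
    """Toy model of starting a resource that has hard start dependencies.

    Targets that are not online are started first; if one cannot be started,
    the dependent resource is not started. Messages are illustrative only."""
    online = set(online)  # work on a copy
    messages = []
    for dep in hard_deps:
        if dep in online:
            continue
        if dep not in startable:
            messages.append(f"start of {dep} failed")
            messages.append(f"start of {resource} failed")
            return messages
        online.add(dep)
        messages.append(f"started {dep}")
    messages.append(f"started {resource}")
    return messages

deps = ["ora.DATA.dg", "ora.CFILE2.dg", "ora.TESTDG.dg"]
after_restart = {"ora.DATA.dg", "ora.CFILE2.dg"}  # TESTDG cannot be mounted
print(start_with_hard_deps("ora.testdb.db", deps, after_restart, startable=set()))
# ['start of ora.TESTDG.dg failed', 'start of ora.testdb.db failed']
print(start_with_hard_deps("ora.testdb.db", deps[:2], after_restart, startable=set()))
# ['started ora.testdb.db']
```

Removing the failed diskgroup from the dependency list (the second call) is the only change that lets the resource start.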

Let’s restart CRS and check the status of the database:

-bash-4.1# crsctl stop crs

-bash-4.1# crsctl start crs

- The ASM alert.log file shows that ASM tried to mount the diskgroup and failed:

Wed Jul 29 09:41:09 2020

ERROR: ALTER DISKGROUP TESTDG MOUNT /* asm agent *//* {1:42096:2} */

Wed Jul 29 09:41:09 2020

WARNING: Disk Group DATA containing voting files is not mounted

ORA-15032: not all alterations performed

ORA-15017: diskgroup "TESTDG" cannot be mounted

ORA-15040: diskgroup is incomplete

ORA-15017: diskgroup "DATA" cannot be mounted

ORA-15013: diskgroup "DATA" is already mounted

- CRS itself is up:

[root@node1 oracle]# crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[root@node1 oracle]#

- Since we restarted CRS on the first node, the instance is no longer running there. It is still up on the second node, but it will go down on the next CRS or node restart there.

[root@node1 oracle]# srvctl status database -d testdb

Instance testdb1 is not running on node node1

Instance testdb2 is running on node node2

[root@node1 oracle]#
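At this point restarting CRS on node2 would take down the last running instance. The rolling-restart rule from the lessons below can be sketched as a check on the srvctl status output. The sketch assumes the plain one-line-per-instance format shown above; the function names are hypothetical.

```python
import re

# One line per instance, e.g. "Instance testdb2 is running on node node2"
STATUS_LINE = re.compile(r"Instance (\S+) is (not running|running) on node (\S+)")

def instance_states(status_text):
    """Map instance -> (node, is_running) from `srvctl status database` output."""
    return {inst: (node.rstrip("."), state == "running")
            for inst, state, node in STATUS_LINE.findall(status_text)}

def safe_to_restart_node(node, states):
    """True if at least one instance stays up on some other node."""
    return any(running and n != node for n, running in states.values())

status = """Instance testdb1 is not running on node node1
Instance testdb2 is running on node node2"""
states = instance_states(status)
print(safe_to_restart_node("node2", states))  # False: testdb2 is the last instance up
print(safe_to_restart_node("node1", states))  # True
```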

- If we try to start the instance on the first node, it fails:

-bash-4.1$ srvctl start instance -d testdb -i testdb1

PRCR-1013 : Failed to start resource ora.testdb.db

PRCR-1064 : Failed to start resource ora.testdb.db on node node1

CRS-2674: Start of 'ora.TESTDG.dg' on 'node1' failed

-bash-4.1$

The following message appears in the ASM trace file when you try to start the instance:

Wed Jul 29 09:44:28 2020

ERROR: ALTER DISKGROUP ALL MOUNT FOR testdb /* asm agent *//* {1:42096:192} *//* incarnation::1*/

It’s scary! You have a failed diskgroup that the database no longer needs in order to open, yet it prevents you from starting the database instance because the database resource depends on it. The only way out is to modify the database resource configuration and remove the diskgroup:

-bash-4.1$ srvctl modify database -d testdb -diskgroup DATA,CFILE2
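In this post, passing DATA,CFILE2 replaced the whole list DATA,CFILE2,TESTDG, so a typo here could silently remove a diskgroup that still holds files. A minimal sketch that derives the new value from the current `srvctl config database` output instead of typing it by hand; the helper is hypothetical and only builds the command string, it does not run it.

```python
def diskgroups_without(config_text, dropped):
    """Value for `srvctl modify database -diskgroup`: the current list minus `dropped`."""
    for line in config_text.splitlines():
        if line.strip().startswith("Disk Groups:"):
            groups = [g.strip() for g in line.split(":", 1)[1].split(",") if g.strip()]
            break
    else:
        raise ValueError("no 'Disk Groups:' line in srvctl output")
    name = dropped.lstrip("+").upper()
    return ",".join(g for g in groups if g.upper() != name)

value = diskgroups_without("Disk Groups: DATA,CFILE2,TESTDG", "+TESTDG")
print(f"srvctl modify database -d testdb -diskgroup {value}")
# srvctl modify database -d testdb -diskgroup DATA,CFILE2
```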

- Checking the crsd.log file now, we can see hard dependencies on only two diskgroups, +DATA and +CFILE2:

2020-07-29 09:46:09.329870 :UiServer:2822174464: {1:42096:285} Container [ Name: UI_REGISTER

API_HDR_VER:

TextMessage[3]

API_REGUPDATE_TAG:

TextMessage[1]

ATTR_LIST:

TextMessage[START_DEPENDENCIES=hard(ora.DATA.dg,ora.CFILE2.dg) weak(type:ora.listener.type,global:type:ora.scan_listener.type,uniform:ora.ons,global:ora.gns) pullup(ora.DATA.dg,ora.CFILE2.dg)STOP_DEPENDENCIES=hard(intermediate:ora.asm,shutdown:ora.DATA.dg,shutdown:ora.CFILE2.dg)]

CLIENT:

TextMessage[]

CLIENT_NAME:

TextMessage[/usr/bin/java]

CLIENT_PID:

TextMessage[13981]

CLIENT_PRIMARY_GROUP:

TextMessage[oinstall]

LOCALE:

TextMessage[AMERICAN_AMERICA.US7ASCII]

QUEUE_TAG:

TextMessage[1]

RESOURCE:

TextMessage[ora.testdb.db]

UPDATE_TAG:

TextMessage[1]

]

To make sure the change succeeded, run the following command and check the output:

-bash-4.1$ srvctl config database -d testdb | grep Disk

Disk Groups: DATA,CFILE2

- Now we are able to start the instance:

-bash-4.1$ srvctl start instance -d testdb -i testdb1

-bash-4.1$

- Output of the alert.log file:

Wed Jul 29 09:47:30 2020

AQPC started with pid=55, OS id=14549

Starting background process CJQ0

Completed: ALTER DATABASE OPEN /* db agent *//* {1:42096:364} */

What I faced that night was this: the diskgroup was successfully dropped from ASMCA, but crsd.log showed that the hard dependency had not been removed from the clusterware configuration. I decided not to restart CRS, expecting that the database would not start because of this dependency. The diskgroup was already empty (it contained no physical datafiles) and was dismounted and dropped successfully, but the database resource’s hard dependency on it was not changed, probably because of a bug. This means that if we had restarted CRS or rebooted both nodes after dropping the diskgroup, the database would not have started, leading to downtime.

Lessons learned:

- After any change in the production environment (even dropping a diskgroup, and even if it succeeded), check the alert.log files of the database and ASM instances, as well as the clusterware logs and trace files.

- After making a cluster-level change, restart CRS or even reboot the node to confirm that everything comes back up correctly.

- Don’t stop the entire database. Restart CRS or the database instances in a rolling fashion, making sure at least one instance is available at all times.
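The first lesson can be partly automated: after dropping a diskgroup, a small script can ask srvctl whether the database resource still lists it, instead of finding out at the next CRS restart. This is a minimal sketch, not an Oracle-provided check. It assumes `srvctl` is on the PATH of the user running it and that the output contains the `Disk Groups:` line seen throughout this post; the `run` parameter exists only so the logic can be tried without a cluster. It complements, rather than replaces, reading crsd.log and the alert logs.

```python
import subprocess

def configured_diskgroups(db_name, run=None):
    """Diskgroups listed by `srvctl config database -d <db_name>`.

    `run` takes the argument list and returns stdout; by default srvctl is
    called through subprocess (run it as the database software owner)."""
    if run is None:
        def run(args):
            return subprocess.run(args, check=True, capture_output=True, text=True).stdout
    output = run(["srvctl", "config", "database", "-d", db_name])
    for line in output.splitlines():
        if line.strip().startswith("Disk Groups:"):
            return [g.strip() for g in line.split(":", 1)[1].split(",") if g.strip()]
    return []

def check_dropped(db_name, dropped, run=None):
    """True when the dropped diskgroup is no longer in the database resource config."""
    name = dropped.lstrip("+").upper()
    if name in (g.upper() for g in configured_diskgroups(db_name, run)):
        print(f"WARNING: {db_name} still depends on +{name}; "
              "fix it with srvctl modify database before restarting CRS")
        return False
    return True

# Try the logic with canned output instead of a real cluster.
fake = lambda args: "Disk Groups: DATA,CFILE2,TESTDG\n"
print(check_dropped("testdb", "TESTDG", run=fake))  # prints the warning, then False
```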

Posted in RAC issues
